OpenAI's new benchmark tests ChatGPT's knowledge of Indian culture

What's the story

OpenAI has launched a new benchmark called 'IndQA' to evaluate how well artificial intelligence (AI) models understand and reason about questions on Indian culture and everyday life, posed in local languages. The move is aimed at improving the language capabilities of AI systems, especially their grasp of the context, culture, history, and other aspects of daily life that are specific to each region.

Benchmark evolution

IndQA addresses shortcomings of existing benchmarks

In a blog post shared today, OpenAI announced the launch of IndQA. The organization noted that while it intends to create similar benchmarks for other languages and regions, India was an obvious starting point. IndQA is meant to address a gap in existing benchmarks, which mostly focus on translation or multiple-choice tasks and fail to capture culturally nuanced, reasoning-heavy questions.

Comprehensive scope

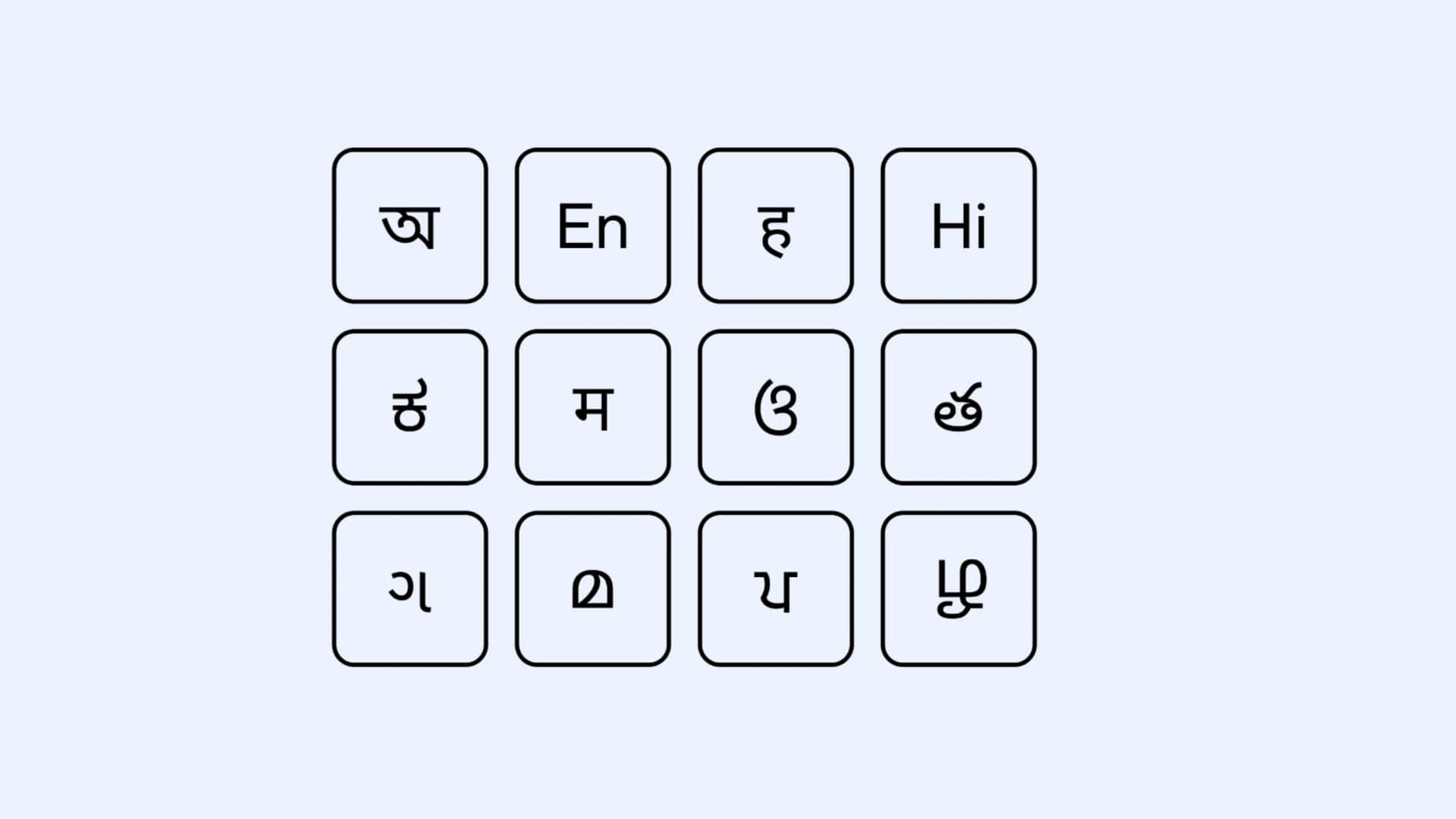

Covers 12 languages and 10 cultural domains

IndQA covers 12 languages: Bengali, English, Hindi, Hinglish, Kannada, Marathi, Odia, Telugu, Gujarati, Malayalam, Punjabi, and Tamil. It tests AI models across 10 domains: Architecture & Design; Arts & Culture; Everyday Life; Food & Cuisine; History; Law & Ethics; Literature & Linguistics; Media & Entertainment; Religion & Spirituality; and Sports & Recreation. The questions were developed in collaboration with 261 domain experts from across India.

Scoring mechanism

Questions were adversarially filtered to ensure quality

IndQA uses a rubric-based approach, scoring each response against criteria set by domain experts. To build the question set, OpenAI hired experts in India across 10 domains to write complex, reasoning-focused prompts. These questions were then 'adversarially filtered': each was tested against OpenAI's top models, and only those the models couldn't answer satisfactorily were retained.
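To make the two mechanisms more concrete, here is a minimal Python sketch. It assumes each expert-written criterion carries a point weight and that a grader marks each criterion as met or not; the names `Criterion`, `rubric_score`, and `adversarial_filter`, and the 0.5 "satisfactory" threshold, are hypothetical illustrations, not OpenAI's actual implementation.

```python
from dataclasses import dataclass


@dataclass
class Criterion:
    """One expert-written rubric item (hypothetical structure)."""
    description: str
    points: int  # assumed weight reflecting how important the item is


def rubric_score(criteria: list[Criterion], met: list[bool]) -> float:
    """Return the share of available points a response earned.

    `met[i]` says whether the response satisfied criteria[i]; in practice a
    human or model grader would make that call (assumed here).
    """
    total = sum(c.points for c in criteria)
    earned = sum(c.points for c, ok in zip(criteria, met) if ok)
    return earned / total if total else 0.0


def adversarial_filter(
    model_scores: dict[str, list[float]], threshold: float = 0.5
) -> list[str]:
    """Keep only question IDs that no tested model answered satisfactorily.

    `model_scores` maps a question ID to each model's rubric score;
    `threshold` is an illustrative cut-off for "satisfactory".
    """
    return [
        qid
        for qid, scores in model_scores.items()
        if all(score < threshold for score in scores)
    ]


if __name__ == "__main__":
    rubric = [
        Criterion("Names the correct festival", 3),
        Criterion("Explains its regional significance", 2),
        Criterion("Answers in the question's language", 1),
    ]
    print(rubric_score(rubric, [True, False, True]))  # 4 of 6 points earned
    print(adversarial_filter({"q1": [0.9, 0.3], "q2": [0.2, 0.4]}))  # ['q2']
```

Using `all(...)` means a question survives only if every tested model falls short; filtering on an average score instead would be a looser test.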