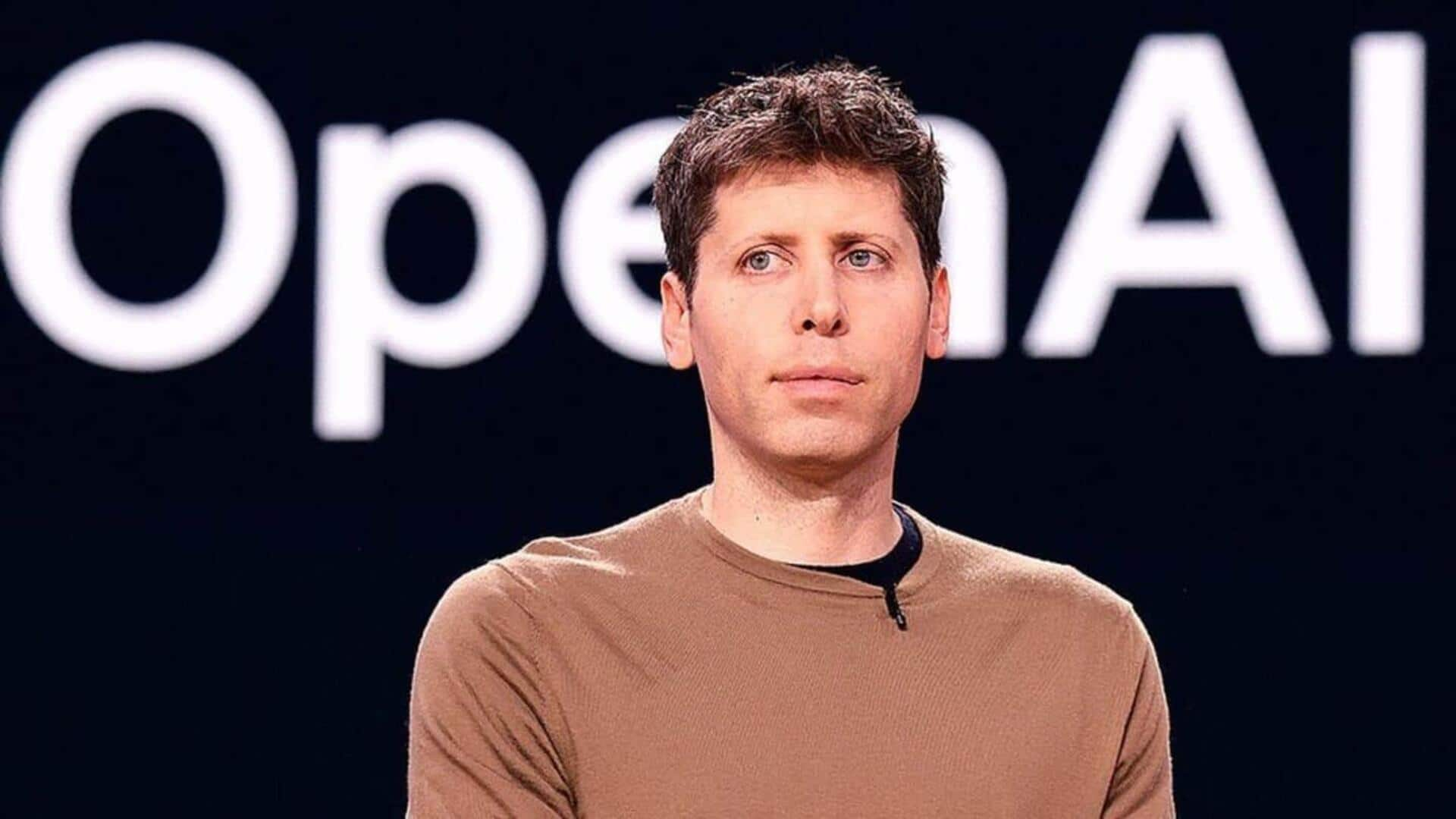

Sam Altman 'uneasy' about people using ChatGPT for life decisions

What's the story

Sam Altman, the CEO of OpenAI, has said he is uncomfortable with people relying on ChatGPT for major life decisions. In a post on X, he noted that many people already use ChatGPT as a kind of therapist or life coach, but said he is uneasy about a future in which people lean heavily on its advice for their most important decisions.

Twitter Post

Take a look at Altman's post

If you have been following the GPT-5 rollout, one thing you might be noticing is how much of an attachment some people have to specific AI models. It feels different and stronger than the kinds of attachment people have had to previous kinds of technology (and so suddenly…

— Sam Altman (@sama) August 11, 2025

AI concerns

OpenAI 'closely tracking' user attachment to AI models

Altman acknowledged that relying on ChatGPT's advice could be beneficial, but said it also makes him uneasy. He said OpenAI has been "closely tracking" how people become attached to its AI models and how they react when older versions are deprecated. He warned against people using technology, including AI, in self-destructive ways, especially users who are mentally fragile or prone to delusion.

User safety

Altman's warning on potential harm of ChatGPT

Altman stressed that while most ChatGPT users can distinguish between reality and fiction or role-play, a small minority cannot. He warned that ChatGPT could be harmful if it steers people away from their "longer-term well-being." He clarified that these views were his personal opinion and not an official OpenAI position.

Model feedback

Backlash over GPT-5 launch

Altman also addressed the backlash from some ChatGPT users following the recent launch of GPT-5. Some users even asked OpenAI to bring back older models such as GPT-4o. Taking to social media, they complained that GPT-5's responses were too "flat" and lacked creativity.

Legal concerns

Legal implications of using ChatGPT as therapist

In a podcast appearance last month, Altman also raised concerns about the legal implications of using ChatGPT as a personal therapist. He said OpenAI could be compelled to hand over users' therapy-style chats in lawsuits. "So if you go talk to ChatGPT about your most sensitive stuff and then there's like a lawsuit or whatever, we could be required to produce that, and I think that's very screwed up," Altman said.