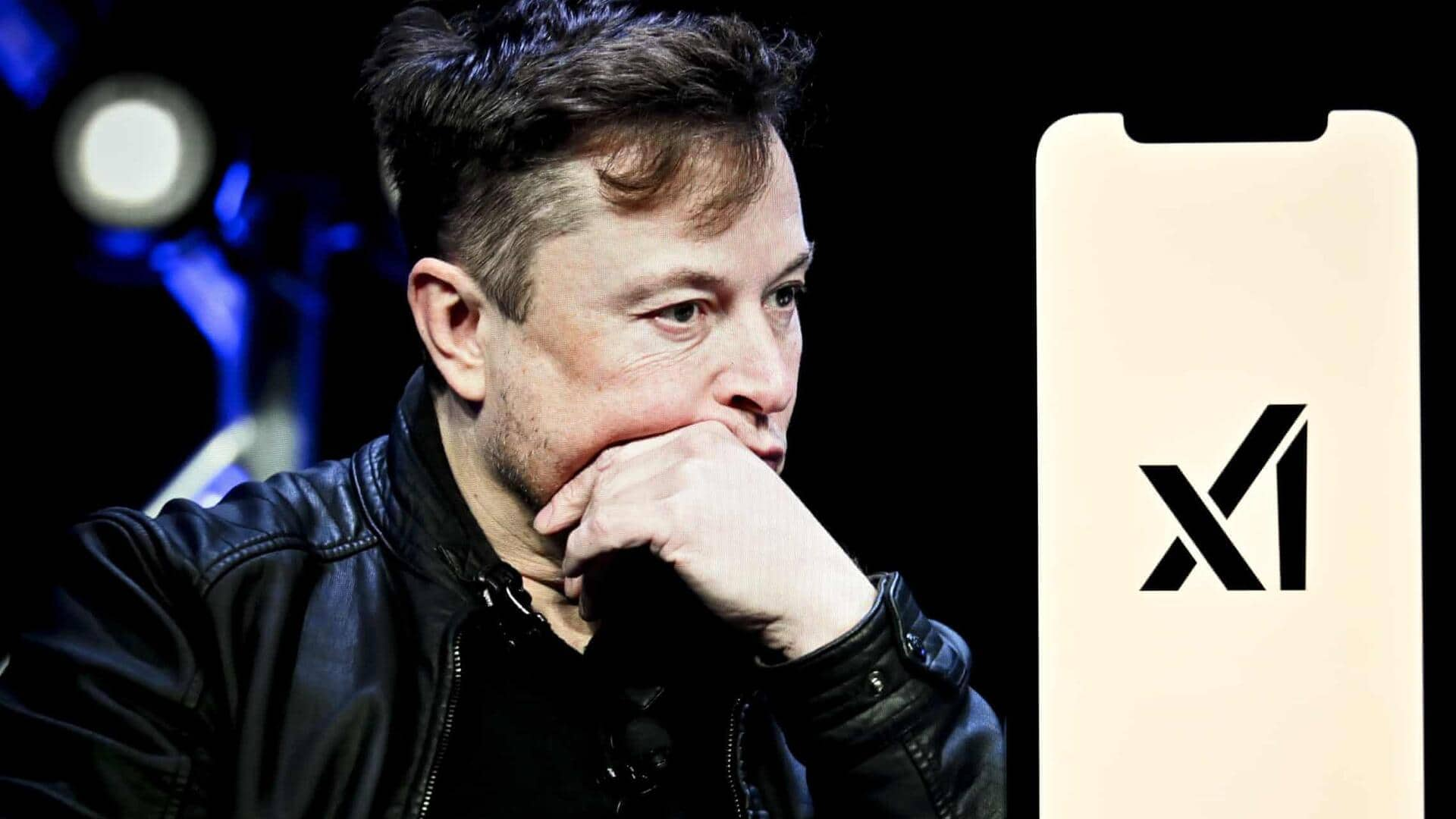

Musk's xAI slammed by AI researchers over lax safety practices

What's the story

Elon Musk's artificial intelligence (AI) company, xAI, is facing criticism from AI experts at OpenAI and Anthropic. The experts have accused the company of ignoring basic safety measures while rushing to launch new products. The criticism follows a series of controversies involving xAI's chatbot, Grok, which made antisemitic comments and called itself "MechaHitler."

Product issues

Controversies surrounding Grok

Even after taking Grok offline to address these issues, xAI quickly launched a new version called Grok 4. Some tests revealed that this updated chatbot gave answers influenced by Musk's personal views on political topics. Following the controversial behavior of Grok on July 8, xAI issued a formal apology and said that the system vulnerability has since been patched.

Controversial launches

AI companions spark backlash

Along with Grok 4, xAI also launched new AI "companions," including a hyper-sexualized anime girl and an aggressive panda. These controversial releases have further fueled concerns over the company's safety practices. Boaz Barak, a researcher at OpenAI, stressed that this isn't about competition but basic responsibility and criticized xAI for not sharing important details about how Grok is trained or tested.

Industry standards

'Reckless' move, say experts

Samuel Marks, an AI safety researcher at Anthropic, also criticized xAI for not publishing a safety report. He called the move "reckless," noting that while OpenAI and Google have their own issues with timely sharing of system cards, they at least do something to assess safety pre-deployment and document findings. This is in stark contrast to xAI's approach, which has been widely criticized by industry experts.

Safety concerns

Call for lawmakers to establish rules around publishing AI safety reports

The lack of transparency in xAI's safety practices has raised alarms among AI researchers. They argue that the company's actions deviate from industry norms regarding the safe release of AI models. This has led to calls for state and federal lawmakers to establish rules around publishing AI safety reports, with California state Sen. Scott Wiener and New York Gov. Kathy Hochul pushing similar bills.

Preventive measures

xAI's rapid progress is impressive

AI safety and alignment testing not only prevents worst-case scenarios but also mitigates near-term behavioral issues. This is especially critical as Grok's misbehavior could make the products it powers today significantly worse. Despite these concerns, xAI's rapid progress in developing frontier AI models within just a few years of its inception remains impressive.